February 17, 2026 • 8 min read

What is AI governance, and why does it matter?

Guru Sethupathy

Organizations are integrating AI systems across decision-making, operations, and customer-facing tools — and the benefits are real. But so, too, are the risks. That’s where AI governance comes in. AI governance refers to the set of policies, processes, roles, and tools that help organizations manage AI risks, ensure its responsible use, and comply with current regulations.

Still, AI governance is new and often confused with other disciplines such as data governance, machine learning operations (MLOps), or InfoSec. That confusion often leads to gaps in oversight or a false sense of confidence. For teams building or deploying AI, let’s explore what matters and what doesn’t when formalizing an AI governance program.

Why care about AI governance now?

While AI governance is still in its infancy, it’s imperative for organizations to care about it today for a number of critical reasons:

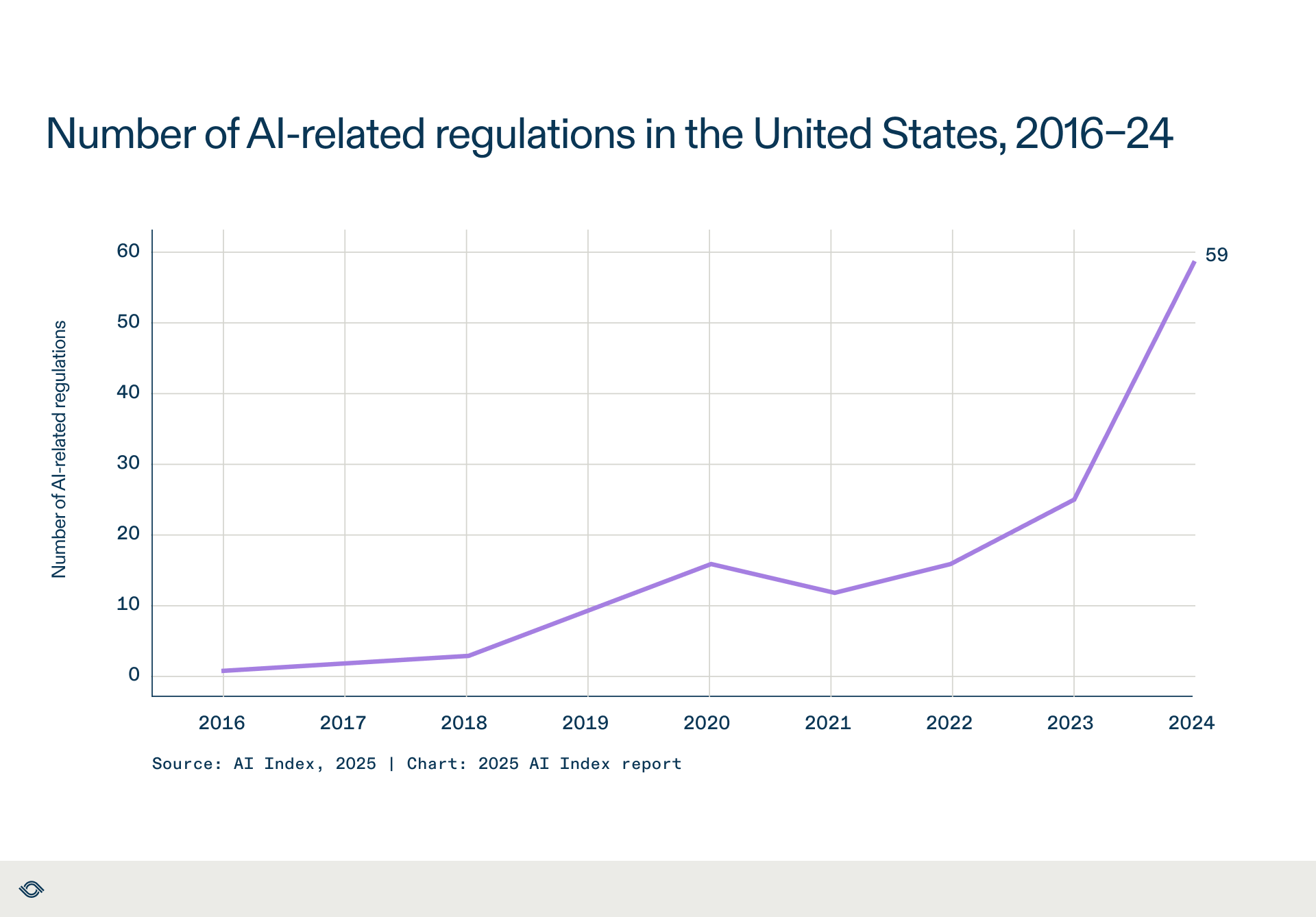

1. AI regulations are beginning to take effect: AI-specific laws are popping up across jurisdictions. The EU AI Act, in particular, creates new requirements for documentation, transparency, and risk management. Many of these laws take effect in 2026.

2. AI failures can be expensive and hard to spot: Models can behave in unexpected ways, especially when built on third-party APIs. Many new AI offerings on the market aren’t transparent. Without oversight, teams may not notice a problem until there’s a headline or a compliance issue.

3. Customers are skeptical of new AI systems: Buyers and users want to know these systems are safe. That’s not something you can prove after the fact. You need systems in place to show how your AI works and to demonstrate how you govern it.

What are some examples of AI governance?

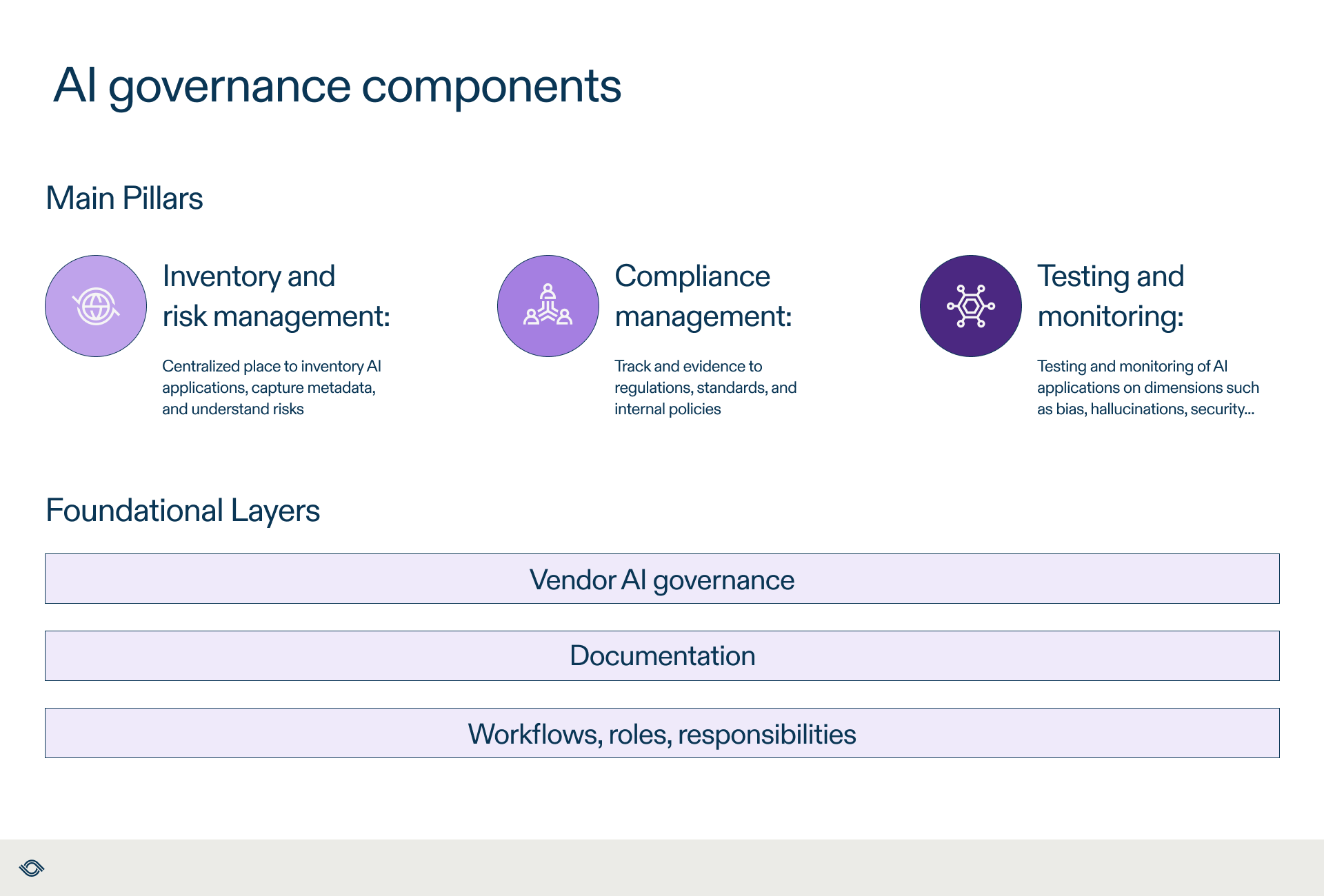

AI governance, done well, helps organizations manage risk and compliance obligations for each of their AI systems. AI governance processes help builders, deployers, and impacted populations 1) ensure that the AI they design or use is safe, and 2) monitor it so that they can flag ongoing issues and make adjustments over time. These processes involve:

- Knowing what AI you’re using and how you are using it

- Understanding where the risks are (legal, operational, reputational, or otherwise) and applying appropriate steps to mitigate them

- Making sure to test and monitor systems appropriately

- Keeping documentation that shows you’re in control

Without AI governance processes in place, organizations risk discovering issues with their AI systems only after something harmful to the business has occurred.

Who is responsible for AI governance?

AI governance is inherently cross-functional. But without a clear owner, it often gets lost between teams.

Most organizations appoint a dedicated AI governance lead, who typically sits in risk, data, engineering, or compliance and often has experience in similar governance domains, such as InfoSec or data privacy. This lead, sometimes called the head of AI governance, is responsible for activities such as:

- Setting the governance agenda at the enterprise level

- Defining the policies and procedures required to implement the organization’s AI governance agenda

- Coordinating stakeholders across different teams (e.g., line of business, data science, legal, data privacy, InfoSec)

- Tracking AI risks and monitoring their resolution across a portfolio of vendor and internally developed AI

- Managing the organization’s alignment with key AI governance standards like NIST AI RMF or ISO 42001

- Assessing the effectiveness of the organization’s AI governance and making improvements as needed

Without clear leadership and defined accountability, AI governance efforts can become fragmented or reactive, leading to inconsistent risk oversight and compliance gaps.

How to match AI governance features to your organization’s needs

Choosing AI governance tools isn’t one-size-fits-all; the right choice depends on your specific context. Focus on these four factors to identify which capabilities matter most.

1. AI use case complexity

- Internal tools / low risk: Basic inventory and policy alignment are enough.

- Customer-facing or sensitive: Add monitoring, transparency, and documentation.

- Highly regulated or high-impact (e.g., hiring, credit): Full risk assessments, compliance workflows, and audit support are essential.

2. Regulatory environment

- Heavy regulation (e.g., EU AI Act, healthcare, finance): You need platforms that map controls directly to laws and support conformity assessments.

- Lighter or evolving regulation: Focus on flexible risk frameworks and policy enforcement tools.

3. Organizational scale and maturity

- Early-stage / small AI footprint: Tools with templates and guided onboarding to help build processes.

- Growing / multi-team setups: Workflow management, role-based access, and integration with existing systems matter.

- Large / global enterprises: Require advanced compliance tracking, evidence management, and audit reporting capabilities.

4. Source of AI systems

- Mostly in-house: Emphasize testing, lifecycle monitoring, and developer collaboration.

- Mostly vendor-provided: Focus on vendor risk assessments, contract compliance, and usage monitoring.

- Mixed environments: Look for platforms flexible enough to handle both.
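
The four factors above can be read as a lookup from an organization's profile to a shortlist of capabilities. The Python sketch below illustrates that idea under stated assumptions; it is not a definitive selection method. The names `OrgProfile` and `recommend_capabilities` and the string keys are hypothetical, the capability strings simply restate the bullets above, use case tiers are assumed to be cumulative (each tier adds to the one before it), and a mixed environment is assumed to need both the in-house and vendor capabilities.

```python
from dataclasses import dataclass

# Hypothetical tables; values restate the bullets above.
# Use case tiers are ordered from lowest to highest complexity.
USE_CASE_TIERS = [
    ("internal_low_risk", ["basic inventory", "policy alignment"]),
    ("customer_facing_or_sensitive", ["monitoring", "transparency", "documentation"]),
    ("regulated_or_high_impact",
     ["full risk assessments", "compliance workflows", "audit support"]),
]
REGULATION = {
    "heavy": ["control-to-law mapping", "conformity assessment support"],
    "light_or_evolving": ["flexible risk frameworks", "policy enforcement tools"],
}
SCALE = {
    "early_stage": ["templates", "guided onboarding"],
    "growing_multi_team": ["workflow management", "role-based access",
                           "integration with existing systems"],
    "large_global": ["advanced compliance tracking", "evidence management",
                     "audit reporting"],
}
SOURCE = {
    "in_house": ["testing", "lifecycle monitoring", "developer collaboration"],
    "vendor": ["vendor risk assessments", "contract compliance", "usage monitoring"],
}
# Assumption: a mixed environment needs both sets of capabilities.
SOURCE["mixed"] = SOURCE["in_house"] + SOURCE["vendor"]


@dataclass(frozen=True)
class OrgProfile:
    use_case: str
    regulation: str
    scale: str
    source: str


def use_case_capabilities(tier: str) -> list[str]:
    """Collect capabilities for every tier up to and including `tier`."""
    names = [name for name, _ in USE_CASE_TIERS]
    if tier not in names:
        raise KeyError(tier)
    picks: list[str] = []
    for name, capabilities in USE_CASE_TIERS:
        picks += capabilities
        if name == tier:
            break
    return picks


def recommend_capabilities(profile: OrgProfile) -> list[str]:
    """Return a de-duplicated capability shortlist, in factor order."""
    picks = (
        use_case_capabilities(profile.use_case)
        + REGULATION[profile.regulation]
        + SCALE[profile.scale]
        + SOURCE[profile.source]
    )
    return list(dict.fromkeys(picks))  # keeps first-seen order


profile = OrgProfile("customer_facing_or_sensitive", "heavy", "early_stage", "vendor")
for capability in recommend_capabilities(profile):
    print("-", capability)
```

In practice the inputs are rarely this clean (an organization can sit in several categories at once), so a table like this works best as a starting checklist for a conversation rather than a final answer.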

5 recommendations for getting started with AI governance

You don’t need to solve everything on day one. Start with structure, and grow from there:

- Build a basic AI inventory that tracks sufficient metadata to properly assess the risk profile and benefits of each AI use case.

- Appoint a governance lead (with backing from leadership) who is responsible for setting an organization’s AI governance agenda and the policies and procedures implementing it.

- Define a few initial policies, such as acceptable use, high-risk criteria, and review points with their criteria.

- Pilot a simple tool, even if it’s just a spreadsheet, to start.

- Train key teams on what AI governance entails and their role in it.
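
To make the inventory and "simple tool" recommendations concrete, the sketch below shows what a spreadsheet-style AI inventory could look like in Python. It is an illustration under stated assumptions, not a prescribed schema: the `AIUseCase` fields, the three-tier `risk_tier` rule, and the example rows are all hypothetical, and a real inventory would likely track more metadata than this.

```python
import csv
import io
from dataclasses import asdict, dataclass, fields


@dataclass
class AIUseCase:
    # All field names are illustrative assumptions, not a standard schema.
    name: str
    owner: str
    source: str            # "in-house" or "vendor"
    customer_facing: bool
    high_impact: bool      # e.g., hiring or credit decisions


def risk_tier(use_case: AIUseCase) -> str:
    """Hypothetical three-tier rule; real criteria come from your policies."""
    if use_case.high_impact:
        return "high"
    if use_case.customer_facing:
        return "medium"
    return "low"


def to_csv(inventory: list[AIUseCase]) -> str:
    """Serialize the inventory to CSV text that a spreadsheet tool can open."""
    columns = [f.name for f in fields(AIUseCase)] + ["risk_tier"]
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=columns, lineterminator="\n")
    writer.writeheader()
    for use_case in inventory:
        writer.writerow({**asdict(use_case), "risk_tier": risk_tier(use_case)})
    return buffer.getvalue()


# Made-up example rows.
inventory = [
    AIUseCase("resume screener", "HR", "vendor", False, True),
    AIUseCase("support chatbot", "Customer Success", "vendor", True, False),
    AIUseCase("meeting summarizer", "IT", "in-house", False, False),
]
print(to_csv(inventory))
```

Keeping the tiering rule in one small function means the high-risk criteria can be revised as policies mature without touching the inventory itself.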

AI governance isn’t just about protecting against something going wrong. Done well, it also helps teams move faster by creating clarity around roles, standards, and acceptable use, and by ensuring that employees and teams can use AI safely.

About the author

Guru Sethupathy is the VP of AI Governance at AuditBoard. Previously, he was the founder and CEO of FairNow (now part of AuditBoard), a governance platform that simplifies AI governance through automation and intelligent and precise compliance guidance, helping customers manage risks and build trust and adoption in their AI investments. Prior to founding FairNow, Guru served as an SVP at Capital One, where he led teams in building AI technologies and solutions while managing risk and governance.
