October 26, 2023

An AI-Powered Call to Action for Internal Audit

Richard Chambers & Anand Bhakta

It’s been under a year since OpenAI launched ChatGPT in November 2022. In that brief time, the rapid adoption of generative artificial intelligence (AI) across industries, regions, and demographics has been remarkable. An April 2023 McKinsey Global Survey — only six months in — found 79% of respondents reporting exposure to generative AI, 22% regularly using it in their work, and 33% whose organizations were using it in at least one function.

Generative AI has hit critical mass at a pace unmatched by any technology in history. Indeed, its entire life cycle has proceeded at warp speed, with regulatory activity (e.g., the EU's AI Act and calls for US oversight) and academic studies of its impacts already well underway. But in most organizations, AI risk management and governance are not keeping pace.

With risks emerging and changing faster than ever, internal audit is uniquely positioned to offer insight and foresight. So why are so few internal auditors taking bold, decisive action to help their organizations understand, use, govern, and gain assurance on generative AI? Amid a world racing to embrace AI, are internal auditors at risk of becoming laggards? What’s behind the lack of action, and how can we fix it?

AI Use and Governance: Current State

Many organizations are diving headfirst into AI, but few seem prepared to govern its use or manage its risks. McKinsey's study found that 55% of organizations have adopted some form of AI, and leading organizations are going all-in on generative AI. But only 21% have policies governing employees' use of generative AI, and most are not working to mitigate the top risks associated with it. For example, only 38% of organizations are mitigating generative-AI-related cybersecurity risk, 32% inaccuracy, 25% intellectual property (IP) infringement, 20% personal/individual privacy, 16% organizational reputation, and 13% workforce/labor displacement.

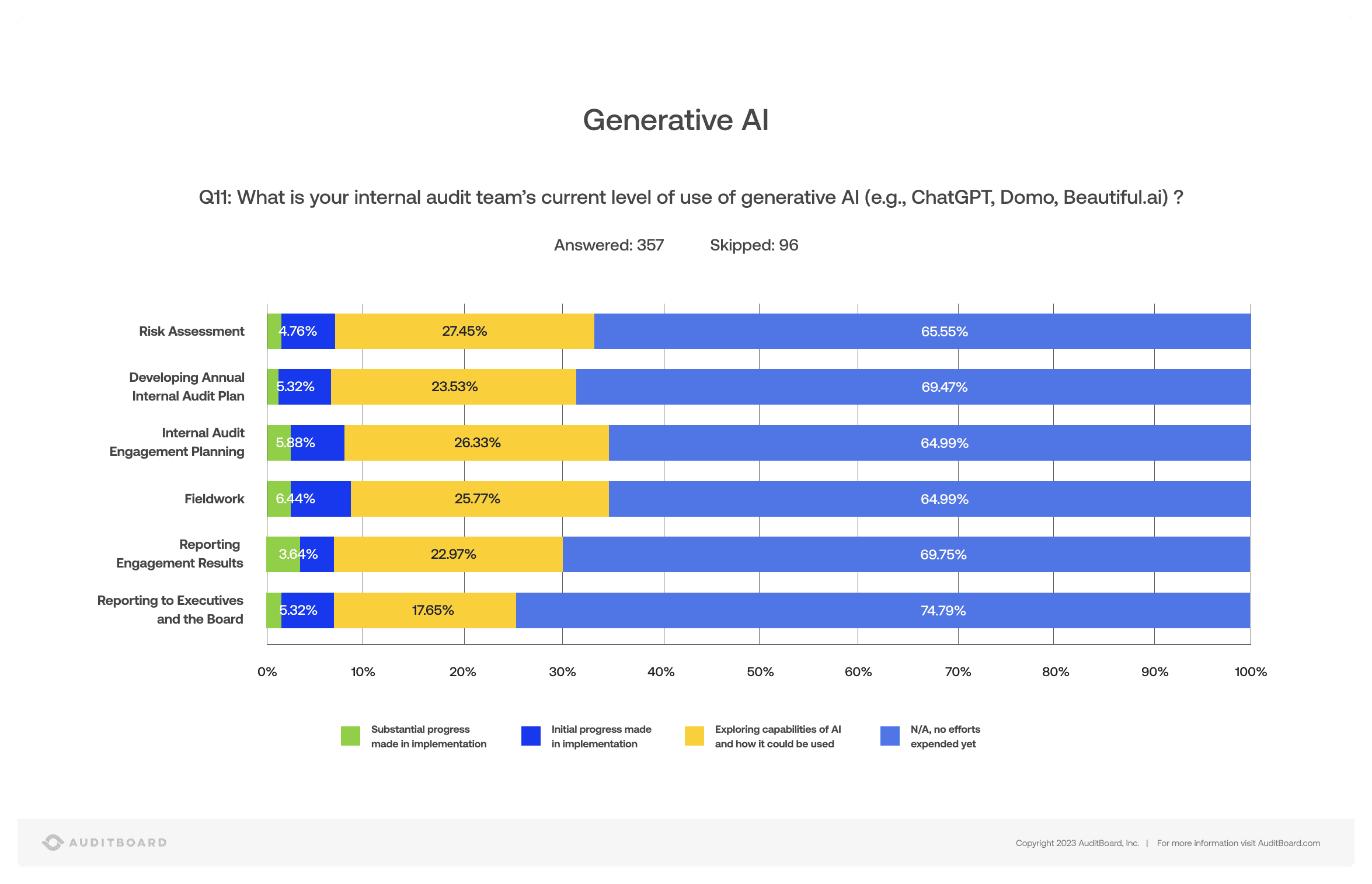

AuditBoard's upcoming 2024 Focus on the Future survey, conducted in August and September 2023 and due out in November, offers a sobering echo of McKinsey's April findings. Only one in four internal audit leaders said their organizations have defined the risks of, or created guidelines for, their use of AI, and only two in five have a clear understanding of how AI is being used in their organizations. At most, 10% of internal auditors are using generative AI in any way in their own work, and 50–75% have not made any effort to explore or implement generative AI in internal audit.

A Useful Analogy: Cloud Computing

Many internal auditors are interested in and engaged with generative AI. So what explains the lagging use and focus? In part, a lack of expertise and resources: a 2022 AuditBoard-Chartered IIA report found that 49% of internal audit leaders cited “lack of skills and competencies” as the top barrier to internal audit's adoption of data analytics and AI, followed by 24% citing “cost/lack of resources.” But we also suspect a general discomfort with the technology and a pressing need for assurance.

Such was the case in cloud computing’s early days. Initially, many regulators — especially in financial services — viewed it as unproven and risky, and banks were slow to adopt. Cloud computing needed to provide a level of assurance around its security, stability, and integrity. Internal audit needed to learn about and validate cloud capabilities and safeguards. Today, cloud is an acknowledged competitive advantage embraced by both banks and regulators.

A similar pattern may be emerging with AI. Businesses rightly worry about accuracy, reliability, security, and more. But discomfort and inexperience shouldn’t keep internal audit from taking action. Just as AI technologies must step up to provide a level of assurance, internal audit must also step up. We must educate ourselves, our leaders, and our boards on AI’s threats and opportunities, and be bold in helping to determine how best to set up AI processes, controls, and governance.

Guidance is available, and more will emerge. The IIA’s 2017 pioneering and still-relevant Artificial Intelligence — Considerations for the Profession of Internal Auditing was supplemented in 2023 by The Artificial Intelligence Revolution (Part 1: Understanding, Adopting, and Adapting to AI; Part 2: Revisiting The IIA’s Artificial Intelligence Framework). ISACA’s guidance includes 2020’s Auditing Guidelines for Artificial Intelligence and 2018’s Auditing Artificial Intelligence. Returning to our analogy, the latter suggests early cloud audits as a useful frame of reference for AI auditing (e.g., focusing on in-place controls and business/IT governance aspects).

Bridging the Gap Between AI and Humans

AI is not trying to replace you. Indeed, most internal auditors are confident the role will always require a human touch: in the AuditBoard-Chartered IIA report, fewer than 3% of internal audit leaders were “very concerned” that advanced AI could replace them. ChatGPT itself says we'll always be needed.

AI is simply offering a toolkit and a better starting point, freeing you to spend more time on proactive strategy, risk management, and high-impact decision-making. We see AI as a capacity multiplier and a magnifier of human intelligence. As AI automates routine tasks and surfaces insights from ever-larger pools of data, human judgment and expertise remain critical for understanding nuance, forming opinions, and developing actionable recommendations.

The challenge and opportunity is to harmonize human acumen and judgment with AI’s capabilities in intelligent automation, connecting risk data, and simplifying processes. That’s the purpose behind AuditBoard’s AI-powered transformation: Secure, intuitive AuditBoard AI will elevate every user of our platform with intelligent insights and automation driven by a range of audit, risk, compliance, operational, and external data sources. But it’s the humans who’ll build on its insights, make decisions, and develop forward-looking strategies and recommendations.

An AI-Powered Call to Action

Internal auditors who embrace innovation have more impact. In his book Agents of Change, Richard Chambers observed that the most powerful change agents in our profession are inherently innovative, deploying new solutions to transform internal audit itself.

AI will have an acute impact on the future of work, and generative AI could prove to be the ultimate capacity multiplier. We should be supporting our organizations in making the most of it, not getting in their way. Make sure you’re educating yourself, exploring opportunities, asking questions, and making AI part of your strategic plan for 2024 and beyond.

About the authors

Richard Chambers, CIA, CRMA, CFE, CGAP, is the CEO of Richard F. Chambers & Associates, a global advisory firm for internal audit professionals, and also serves as Senior Advisor, Risk and Audit at AuditBoard. Previously, he served for over a decade as the president and CEO of The Institute of Internal Auditors (IIA). Connect with Richard on LinkedIn.

Anand Bhakta is Sr. Director of Risk Solutions at AuditBoard and a cofounder and Principal of SAS. He has over twenty years of audit and advisory experience. Anand spent 8 years at Ernst & Young prior to SAS, and has served as a trusted advisor for numerous internal audit and management executives. Connect with Anand on LinkedIn.